B2B Email Metrics Part Two – The most important metrics

In part one I explained the Big Open Rate Lie and why it makes Open Rate a bad metric for a B2B email campaign. The good news is that there are plenty of other metrics that you can – and should – use to measure B2B email campaign performance. I’ll go through them briefly now, starting with the most important.

Email campaign metric #1: spam complaint rate

Your email campaign system should be able to tell you how many spam complaints – that is, manual complaints raised by humans, not automatic spam filters – your campaign has generated. So if, for example, you sent 1,000 emails and generated 1 spam complaint, your spam complaint rate (SCR) is 1/1,000 = 0.1%.
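If you export the raw counts from your email system, the arithmetic is easy to reproduce. Here is a minimal Python sketch; the function name `rate_percent` is my own for illustration and isn't part of any particular email platform.

```python
def rate_percent(events: int, emails_sent: int) -> float:
    """Return the number of events as a percentage of emails sent."""
    if emails_sent <= 0:
        raise ValueError("emails_sent must be a positive number")
    return 100 * events / emails_sent


# The example from the text: 1 spam complaint from 1,000 emails sent.
scr = rate_percent(1, 1000)
print(f"Spam complaint rate: {scr:.1f}%")
```

The same calculation works for any "events per emails sent" metric, such as hard bounces or unsubscribes.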

In a perfect world, a compliant and ethical marketer should never get a spam complaint. All your email addresses should be correctly opted-in. And every recipient should recognise the emails you send as legitimate and valuable messages.

In reality, one can’t avoid the occasional spam complaint. There can be misunderstandings. Or someone might end up on your email database by mistake. Or in an extreme situation a recipient might maliciously raise a spam complaint. So your SCR won’t be exactly zero. But it should be a very, very low number. I’d see an SCR even as low as 0.1% as a cause for concern.

If your campaigns generate any significant number of spam complaints it’s likely your email campaign provider will warn you that your account might be paused or even suspended. This is because email service providers have to protect the reputation of their systems for all of their subscribers, and spam complaints harm that reputation. But don’t wait for a warning and risk a ban. Monitor every campaign send for spam complaints. If you notice any hint of an uptick in spam complaints, take some action to improve your processes and the quality of your database.

Email campaign metric #2: hard bounce rate

Your email campaign system should tell you this number for each send.

A “hard bounce” means you sent an email to an address that cannot receive it for some permanent reason. (This is distinct from a “soft bounce” which happens with a temporary problem, like a mail server being temporarily down for maintenance.)

This shouldn’t happen very often, but you will naturally get a few hard bounces for a B2B email campaign. For example, perhaps a person has left their job and their corporate email address has been deactivated.

As with spam complaints, your hard bounce rate should normally be a very small percentage – ideally 0.1% or lower. But there are some circumstances when it might be a little higher, especially if you are sending to a list that hasn’t been used in a while.

Most email systems will automatically remove or suppress a contact that generates a hard bounce. If yours doesn’t do this, or if you are using multiple email systems (so that a hard bounce detected by one system might not be removed from the other systems’ lists), you might need to take manual steps to remove or suppress hard bounces.
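If you do need to handle this manually across several systems, the idea is to pool every system's hard bounces into one suppression list and apply it everywhere. Here's a rough Python sketch of that idea. It assumes you can export hard-bounced addresses and contact lists as plain lists of email addresses; the function names and example addresses are made up for illustration.

```python
def build_suppression_list(*bounce_reports):
    """Combine hard-bounced addresses from several systems into one set."""
    suppressed = set()
    for report in bounce_reports:
        # Normalise so "Jo@Example.com " and "jo@example.com" match.
        suppressed.update(address.strip().lower() for address in report)
    return suppressed


def remove_suppressed(contacts, suppressed):
    """Return the contacts that are not on the suppression list."""
    return [c for c in contacts if c.strip().lower() not in suppressed]


# Hypothetical exports from two different email systems.
system_a_bounces = ["jo@example.com"]
system_b_bounces = ["Sam@Example.com "]

suppressed = build_suppression_list(system_a_bounces, system_b_bounces)
clean_list = remove_suppressed(
    ["jo@example.com", "sam@example.com", "alex@example.com"], suppressed
)
print(clean_list)  # only alex@example.com remains
```

Run the combined list against every system's contact list before each send, so a bounce caught by one system is suppressed in all of them.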

A rise in hard bounces will often trigger a warning from your email system provider, because it’s a signal of potential spamminess and bad contact list hygiene. Don’t keep sending to a list that will cause hard bounces.

Monitor your hard bounce rate for every campaign send and take action if you see a spike.

If you need to send to a B2B email list that’s not been used in a while, consider using a list checking service like Neverbounce to reduce the risk of excessive hard bounces.

Email campaign metric #3: unsubscribe rate

Again the unsubscribe rate for each campaign should be reported by your email campaign system. If you send, say, 1,000 emails, and two people unsubscribe, then your unsubscribe rate is 2/1,000 = 0.2%.

Getting occasional unsubscribes from a B2B email list is a fact of marketing life. It’s not necessarily saying something is wrong. Perhaps a person has changed job role and your product is no longer relevant to them. Perhaps a person is moving to a different company and intends to resubscribe with their new corporate email address. But a high unsubscribe rate is a danger sign – perhaps your emails are too boring, or too frequent, or insufficiently targeted.

In general I’d like to see an unsubscribe rate as a very low number, say 0.2% or lower. But there are some circumstances in which it might be a little higher. In particular if you’ve made a big change to your product or service, or if you are emailing a “cool” list that hasn’t been used for quite a while, you may see a higher level of unsubscribes at first.

Keep an eye on unsubscribe rates and take action if you see a sustained high level or any sudden increase.
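To make that monitoring routine, you could run a simple check after every send. The Python sketch below uses the rough guide figures from this post (0.1% for spam complaints and hard bounces, 0.2% for unsubscribes). Treat those limits as my rules of thumb rather than industry standards, and note that the names and the shape of the `counts` dictionary are assumptions for illustration.

```python
from fractions import Fraction

# Guide figures from this post; adjust them for your own list.
# Each entry is (limit, flag_when_equal).
GUIDE_LIMITS = {
    "spam_complaints": (Fraction(1, 1000), True),  # even 0.1% is a concern
    "hard_bounces": (Fraction(1, 1000), False),    # ideally 0.1% or lower
    "unsubscribes": (Fraction(2, 1000), False),    # say 0.2% or lower
}


def metrics_to_review(emails_sent, counts):
    """Return the names of metrics whose rate is outside the guide figures."""
    if emails_sent <= 0:
        raise ValueError("emails_sent must be a positive number")
    flagged = []
    for name, (limit, flag_when_equal) in GUIDE_LIMITS.items():
        # Fractions avoid rounding surprises right at the limit.
        rate = Fraction(counts.get(name, 0), emails_sent)
        if rate > limit or (flag_when_equal and rate == limit):
            flagged.append(name)
    return flagged


send = {"spam_complaints": 0, "hard_bounces": 3, "unsubscribes": 5}
print(metrics_to_review(1000, send))  # hard bounces and unsubscribes flagged
```

A flag here is a prompt to investigate, not a verdict. As noted above, a list that hasn't been used in a while may legitimately run a little higher at first.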

Email campaign metric #4: clickthrough rate

The clickthrough rate (CTR) is a well-named metric – it’s the number of times that a link in your email is clicked, expressed as a proportion of the emails you sent.

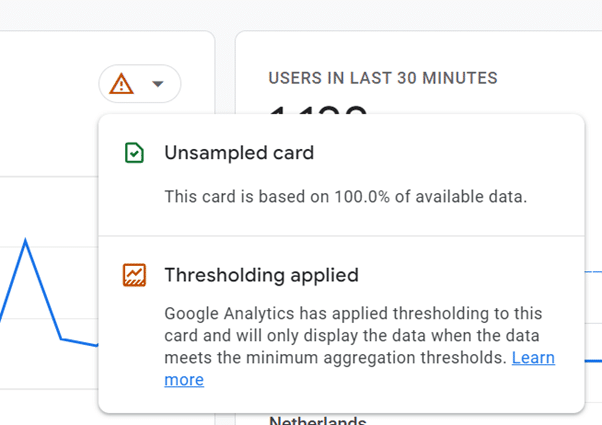

Most email marketing systems will report on this number. But in some cases you might need to use a separate analytics platform (like Google Analytics) to measure it.

There are some nuances here. For example, if the same person clicks on 5 different links in an email that you send, do you count that as 5 clicks or only 1 for the purposes of measuring CTR? Different systems will handle this in different ways.
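To see how much the counting method matters, here is a small Python sketch that computes CTR both ways from the same click data. It assumes each click is recorded as a (recipient, link) pair and that the rate is measured against emails sent. Your own system may use emails delivered instead, and all the names here are illustrative.

```python
def click_rates(click_events, emails_sent):
    """Return (total-click CTR, unique-clicker CTR) as percentages."""
    if emails_sent <= 0:
        raise ValueError("emails_sent must be a positive number")
    total_clicks = len(click_events)
    # Count each recipient once, however many links they clicked.
    unique_clickers = len({recipient for recipient, _link in click_events})
    return (100 * total_clicks / emails_sent,
            100 * unique_clickers / emails_sent)


# One person clicks 5 different links; another clicks 1 link.
clicks = [("jo@example.com", f"link-{n}") for n in range(1, 6)]
clicks.append(("sam@example.com", "link-1"))

total_ctr, unique_ctr = click_rates(clicks, emails_sent=1000)
print(f"Total-click CTR: {total_ctr:.1f}%")
print(f"Unique-clicker CTR: {unique_ctr:.1f}%")
```

With the same six clicks, the total-click figure is three times the unique-clicker figure. So check which definition your system uses before comparing numbers across platforms.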

Clickthrough rate is a useful measure but it’s very hard to give any general guidance about a “good” CTR for B2B email campaigns. Some emails are very self-contained and informational. They don’t need to be clicked on in order to achieve their marketing objectives. Other emails are trying hard to get someone to take action by clicking on a link. In those cases, the likely CTR depends very much on the level of commitment required by the call to action – “click here to read more” is likely to get a lot more clicks than “click here to contact sales”.

So please measure and monitor the CTRs from your B2B email campaigns, but be aware there’s no set target value for CTR. Rather you should compare CTRs from similar emails with similar calls-to-action over time. If you have a big enough email list and send to it consistently over a long period of time, differences in CTRs may help you to identify better- or worse-performing content.

Email campaign metric #5: post-click activity

If your B2B email campaign is primarily designed to get a prospect to take some action, then “post-click activity” is the ultimate measure of its value. That is: when a person not only clicks on a link in an email, but also goes on to take the desired follow-up action. This might be, for example, filling in a form to download a white paper, or booking a slot on a webinar, or asking for a sales callback. Often this activity would be considered a “conversion” of some sort.

Some email marketing systems have facilities for tracking post-click activity (usually by adding a special tag or tracking pixel to a form completion page), but often you’ll need to use a separate analytics system like Google Analytics to track post-click activity and conversions.

It’s important to track post-click activity but there are some caveats:

- If you are marketing to a niche audience, you won’t get many conversions from any given email send. That doesn’t mean your email sends are a failure! B2B sales and consideration cycles are often very long. You need to keep engaging the same audience over an extended period.

- Your emails have value even if a person doesn’t “convert”. Just seeing your company’s name in an email inbox, or reading some useful content in the email preview, will have a positive branding effect for many people in your list. Your email campaigns may help to convert them at a later date via a different touchpoint.

- There are many technical limitations to tracking a person’s digital journey. Some people might see your email on one device but decide to follow up using a different device, for example. You won’t see this value attributed to the email campaign in your conversion metrics.

So conversion and post-click email metrics are best used in a relative way – if one type of campaign gets twice as many downloads per email sent as another, it is likely to be twice as effective. Don’t rely on them as absolute measures of email ROI.
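As a sketch of what "relative" means in practice, the Python below compares two campaigns by conversions per 1,000 emails sent. The campaign figures are invented for illustration, and the function names are mine.

```python
def conversions_per_thousand(conversions, emails_sent):
    """Return conversions per 1,000 emails sent."""
    if emails_sent <= 0:
        raise ValueError("emails_sent must be a positive number")
    return 1000 * conversions / emails_sent


def relative_performance(campaign_a, campaign_b):
    """Return how many times better campaign A converts than campaign B.

    Each campaign is a (conversions, emails_sent) pair.
    """
    rate_a = conversions_per_thousand(*campaign_a)
    rate_b = conversions_per_thousand(*campaign_b)
    if rate_b == 0:
        raise ValueError("campaign B has no conversions to compare against")
    return rate_a / rate_b


# Invented figures: (downloads, emails sent).
white_paper = (8, 2000)  # 4 downloads per 1,000 emails
webinar = (2, 1000)      # 2 downloads per 1,000 emails

print(relative_performance(white_paper, webinar))  # 2.0
```

With a niche audience the counts behind these ratios will be small, so look for differences that hold up over several sends rather than reading much into a single comparison.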

Summing it up

Email metrics are complex to understand and to use. I hope I’ve given you at least a flavour of the top priority metrics and some of the pitfalls to avoid. We’ll return to some of these themes in future blog posts.

If you have questions about B2B email metrics or need any other help with your B2B email marketing, please get in touch!